AI Rubber Ducky Pair Programmer

Please try out the extension on the VSCode extension marketplace and let me know what you think!

A few weeks ago I worried I was bothering my coworker by calling him too often for help debugging. On two separate calls I exclaimed that I knew exactly what my bug was before he had said a word, and felt embarrassed as he laughed at me through the computer screen.

I thought: what if I could create an AI rubber ducky that I could call instead of him?

UX

I wanted a UX where I could talk to the rubber ducky and it would both hear me and see the code I was talking about, just like a Zoom call with a coworker.

You can either click on a single line, or highlight multiple lines to send them as context to the model.
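As a rough illustration of what "sending lines as context" could look like, here is a minimal Python sketch that turns a selection into a text message for the model. The function name `build_selection_context`, the message format, and the default filename are all hypothetical; this is not the extension's actual code, which runs inside VSCode.

```python
def build_selection_context(source: str, start_line: int, end_line: int,
                            filename: str = "model.py") -> str:
    """Format lines start_line..end_line (1-indexed, inclusive) as a context message.

    A single clicked line is just the case start_line == end_line.
    The message layout is an assumption made for illustration.
    """
    lines = source.splitlines()
    if not (1 <= start_line <= end_line <= len(lines)):
        raise ValueError("selection is outside the file")
    selected = lines[start_line - 1:end_line]
    # Keep the original line numbers so the model can refer back to them.
    numbered = [f"{n}: {text}" for n, text in enumerate(selected, start=start_line)]
    header = f"User selected {filename} lines {start_line}-{end_line}:"
    return "\n".join([header, *numbered])


if __name__ == "__main__":
    demo = "a = 1\nb = 2\nc = 3"
    print(build_selection_context(demo, 2, 3, "demo.py"))
```

Keeping the original line numbers in the message is a design choice: it lets the model say "look at line 3" in a way that matches what you see in the editor.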

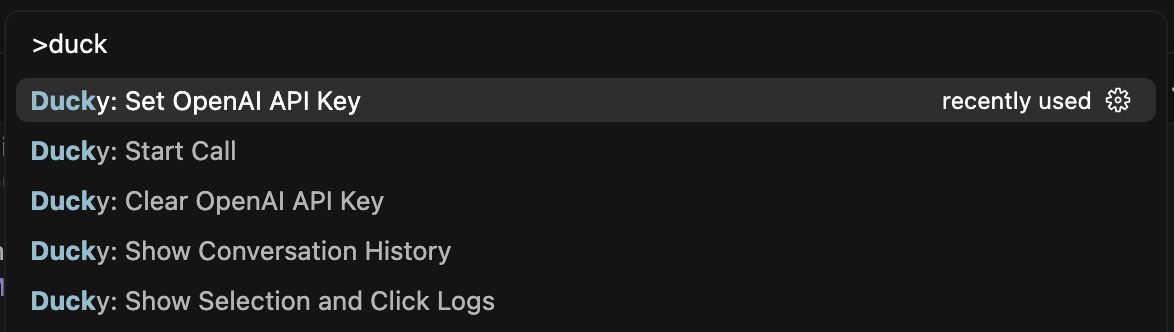

Quickstart

- BYO-API key

- Start Call

- Show Conversation History

User Test

To test whether this is useful, I created a dummy codebase for training a simple Vision Transformer classifier on a small subset of the CIFAR-100 dataset. I introduced a small bug in the model's __init__():

❌ num_patches = (img_size // patch_size) * (img_size // patch_size - 1)

✅ num_patches = (img_size // patch_size) * (img_size // patch_size)

and asked my friend to use the AI rubber ducky to help him debug what was going wrong.
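To make the effect of the bug concrete, here is a small pure-Python sketch that compares both formulas against the number of patches you get by actually tiling the image. CIFAR images are 32x32; the patch size of 4 is an assumed value, not necessarily the one used in the test codebase.

```python
def num_patches_correct(img_size: int, patch_size: int) -> int:
    per_side = img_size // patch_size
    return per_side * per_side


def num_patches_buggy(img_size: int, patch_size: int) -> int:
    # The injected bug: one dimension is short by one patch.
    return (img_size // patch_size) * (img_size // patch_size - 1)


def count_patches_by_tiling(img_size: int, patch_size: int) -> int:
    """Count non-overlapping patches by walking the image grid."""
    starts = range(0, img_size - patch_size + 1, patch_size)
    return sum(1 for _row in starts for _col in starts)


if __name__ == "__main__":
    img_size, patch_size = 32, 4  # patch_size is an assumption
    print(count_patches_by_tiling(img_size, patch_size))  # 64
    print(num_patches_correct(img_size, patch_size))      # 64
    print(num_patches_buggy(img_size, patch_size))        # 56
```

In ViT implementations that size the positional embedding from num_patches, a mismatch like 56 vs. 64 would typically surface as a shape error when the embedding is added to the patch tokens, rather than as an error pointing at the line with the bug.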

Why now?

I’m seeing a divergence in the way people write code at Berkeley vs. at my job. Students / entrepreneurs are vibe coding to the max while my coworkers / friends at other companies are using LLM tools but still handwriting their bugfixes & features. I believe this is because production codebases:

- are about more than just the code i.e. there are business decisions being made outside the repo

- are too large & expensive to feed into a prompt

- will always need to be debugged, whether the code is human or AI generated

I can see a future where there are fewer programmers than there are today, but I believe the paradigm of asking an AI for help getting unstuck before bothering your coworker is here to stay for all knowledge work.

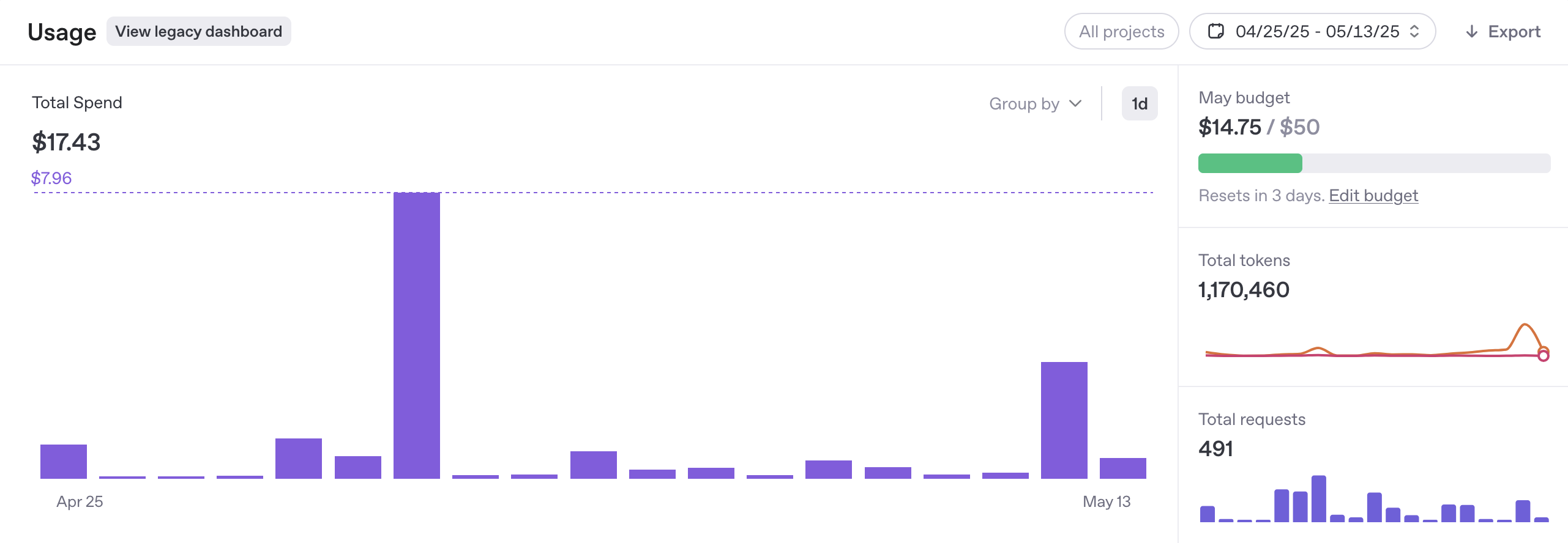

Cost

This entire project cost around $40 to make, split evenly between a Cursor subscription and the OpenAI Realtime API. The spike in cost came from using the full 4o model during my friend's 10-minute user test. 4o-mini is around 4x cheaper for audio tokens and 10x cheaper for text tokens.
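As a back-of-the-envelope sketch of what those ratios mean, the snippet below rescales a session's 4o cost to an estimated 4o-mini cost. The $8 audio / $2 text split is a made-up example, not a measurement from my usage, and the ratios are the approximate ones quoted above.

```python
def estimated_mini_cost(audio_cost_4o: float, text_cost_4o: float,
                        audio_ratio: float = 4.0, text_ratio: float = 10.0) -> float:
    """Rescale a 4o session cost using the approximate price ratios.

    audio_ratio and text_ratio default to the rough "4x" and "10x" figures;
    real pricing may differ.
    """
    return audio_cost_4o / audio_ratio + text_cost_4o / text_ratio


if __name__ == "__main__":
    # Hypothetical session: $8.00 of audio tokens and $2.00 of text tokens on 4o.
    print(f"${estimated_mini_cost(8.0, 2.0):.2f}")  # $2.20
```

Because a voice call is dominated by audio tokens, the overall saving in a session like this sits much closer to 4x than to 10x.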

Future features:

- Integrate with Cursor / GitHub Copilot

- Transcribe user’s voice into chat

- Allow users to modify system prompt for personality

- Ducky learns from past conversations

- Track user file edits

- Let ducky have a cursor

- Visualize debugging attempt paths, e.g.:

Vision Transformer Debugging Journey

Problem Identified

User is experiencing an error with a Vision Transformer (ViT) model implementation

Initial Exploration

Started exploring model.py, looking at model initialization parameters and forward pass implementation

First Insight

Discovered potential issue with patch calculation:

Key Discovery

Identified critical error in forward pass:

Variable 'cls_tokens' is referenced but 'self.cls_token' is undefined in the model!

Solution

Added missing class token initialization in __init__: